Cloud Resume Challenge Part 4/4 - DevOps

Table of Contents

Introduction #

This post is the last part in as series of my story on the Cloud Resume Challenge. Below is the link for the other posts.

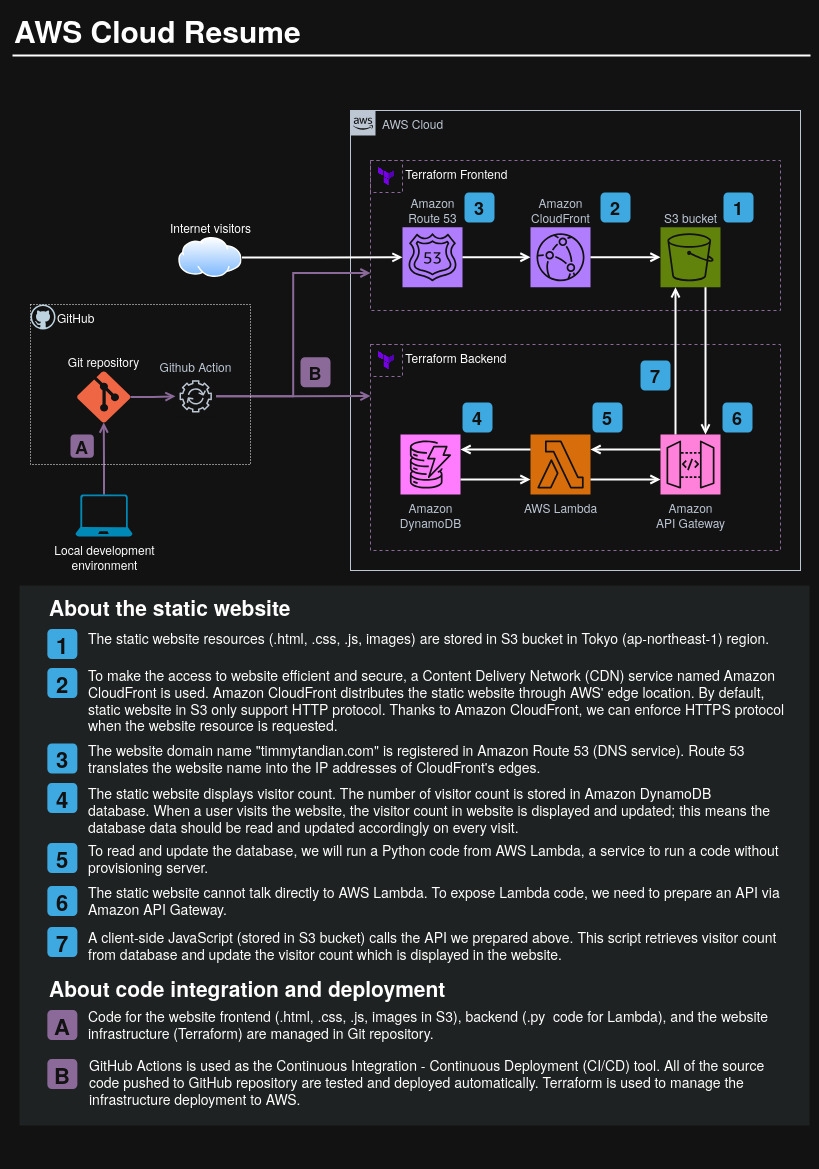

System Diagram #

Maybe it is not as relevant as in the post about front-end or back-end, but for your reference I would like to show you the overall picture of the Cloud Resume system here.

Overview of DevOps practice in this project #

Traditionally, DevOps practices focus on bridging the collaboration between software developer team and IT operation team, with the goal of enhancing software delivery quality and speed. The DevOps practices usually include the heavy use of version control system, continuous integration (CI) & continuous delivery (CD), agile project management, or a culture of close collaboration and communication.

In this relatively small project, where I am the sole project member, there are some DevOps practices that I had chance using. Below are my brief explanation of them to make sure we are on the same page. Feel free skipping to the next section if you are familiar with these already.

Source Control #

This is the act of managing and storing source code in a version control system like git and GitHub. When working with a group of contributors, each contributor can work on a branch of the main code without affecting the main branch itself. With this practice, we can also ensure the historical change of our software is trackable, allowing us to revert back to previous version if necessary.

Continuous Integration (CI) & Continuous Delivery (CD) #

CI is the process of integrating code change into main branch, and automating software testing and build. CD is the process of automating software packaging and its deployment to target machines.

Some central repository systems like Github or Gitlab have build-in CI/CD tool, e.g. Github Action or Gitlab CI/CD. Even though using Github or Gitlab, we can also have options to use third party tool like Jenkins.

Testing #

Software testing is an activity of making sure the software we develop run as intended in term of functionality or appearance. The scope of software testing can be as small as validating a single function, or grow even wider to validate the integration and interaction between module. In software testing world, verifying a single unit of code (usually a function block) is called unit testing.

Infrastructure as Code (IaC) #

Infrastructure as Code (IaC) is a practice that define and manage IT infrastructure as code, where the code is usually managed in version control system. By executing the code, we can automatically provision IT infrastructure (e.g. EC2 server, DNS service, etc.). Another benefit is, we can track how our infrastructure change by examining the source code from version control system.

How these practices are relevant in the Cloud Resume project #

I have briefly introduced the concept of some DevOps practices. Now let’s review how these practices are being utilized in the project.

Source Control #

Source control is the heart of this Cloud Resume project. All source codes used to build the front-end, back-end, testing, defining IaC are stored in centralized repository. Also, to make the automation with CI/CD works, having our source code stored in one centralized place is paramount.

Continuous Integration (CI) & Continuous Delivery (CD) #

In this project, I used CI to automate software testing. If somehow the software testing failed (e.g. there is a bug in my code), we can automatically cancel the software delivery. If the test is successful, we can pack the app/resources and deploy them using IaC to AWS, e.g. delivering .html, .css, .js to S3 bucket, or updating python code in Lambda.

This CI/CD practice is powerful because now I can automate the delivery. Automation means less possibility of having human error. Please imagine, suppose I want to add a new certification achievement in my cloud resume. Without CI/CD, after changing the .html code I have to manually test the code, open AWS console, click through the GUI to reach S3 management window, then finally upload the new .html file into the S3 bucket. In contrast, with just git commit and git push and Pull Request (PR), the CI/CD pipeline does the test and publishing of the updated .html file straight to AWS S3 automatically. One push and done - how powerful!

Testing #

Software testing is a huge and deep topic. There is even a specialist/job role to handle this field. However, software testing is not the main focus of my cloud resume project, so in this small project I did the software testing as following.

| Part of code | System Area | Type of test |

|---|---|---|

| Python to interact with DynamoDB (storing website visitor count) | Back-end | Unit test |

| JavaScript to show the number of visitor count on the webpage | Front-end | Unit test |

| HTML code that structures the cloud resume webpage | Front-end | Static analysis (linting) |

As you see in the table above, mostly I focus the software testing on unit-test because of the small scale of this project. For HTML code, I did static analysis (linting), making sure the HTML code doesn’t have any typo or syntax error. The static analysis is different than the usual software testing, because we only analyze the code statically (without executing it on any runtime). I included the static analysis in the testing part because I would cancel the CI/CD pipeline processing if the static analysis failed.

Infrastructure as Code (IaC) #

Besides automating infrastructure deployment and improving the visibility of infrastructure configuration & change, I adore IaC because it can destroy and rebuild my cloud resources easily and quickly. My love for IaC bloomed on one occasion when I decided to change the cloud resume domain name from timmytandian.com to resume.timmytandian.com. If not using IaC, I have to manually change the custom domain name from AWS console for each resource one by one. Changing manually from the AWS console is not only more prone to human error, but also introduces mental overhead because I need to keep thinking the right order of resource when initiating the domain name change.

Fortunately, in IaC we can define a resource’s domain name using a variable. By simply changing the variable’s value, with a few Terraform commands I could rebuild my entire cloud resume with a new domain name in just 5-10 minutes. So sweet!!!

Implementation Details #

Source Control #

When working on the source control for this project, there are two big decisions I had to make.

- Which version control system should I use? Github, Gitlab, other self-host service like Gitea?

- Should I make a large mono-repo? Or, should I divide the code into 2 repositories (front-end & back-end)?

Let’s talk about each of them.

Decision 1: Version Control System #

I decided to use the combination of Git and Github because I already have had some experience working with those tools. In case you, other fellow of Cloud Resume Challengers, are still undecided about which version control system, beside your prior experience with the tool, you can also consider their integration with CI/CD tool. I heard a lot of good reviews about Gitlab CI/CD tool, maybe you might like checking Gitlab as well. Nevertheless, in my opinion choosing either Github or Gitlab won’t be differ that much from technical perspective.

Decision 2: Mono-Repo or Multi-Repo #

When working on this project, I thought having a separate repository for front-end and back-end is good because of better isolation. Indeed it’s true. Having two separate repositories for front-end and back-end works pretty well in this project for most of the time.

However, there was one time when I had to scratch my head because of the multi-repo structure. When I worked on the IaC (Terraform), it turned out that some of the back-end resources need to refer to resources in the front-end. Separating the repository makes referencing front-end resources not straightforward from back-end resources which live in different repository. As a workaround, I had to refer the resource name that lives in front-end repository by hard-coding the resource name in the back-end configuration.

In retrospective, having a mono-repo makes more sense. If I could redo this project, I would make a mono repo, bringing the front-end and back-end source codes together. Although I had a small regret when making this decision, overall this is not a big deal.

Continuous Integration (CI) & Continuous Delivery (CD) #

As I host the project source code in Github, I use Github Action to automate the CI & CD. There is nothing special in the CI/CD implementation itself, you can check out the code in the Github repository (front-end workflow, back-end workflow). For your convenience, here is the flowchart of the CI/CD pipeline.

Front-end CI/CD workflow #

git checkout]

B --> C[Setup Node.js]

C --> D[Install dependencies forfront-end testing] D --> E[Run HTML linting and

JavaScript code testing] E --> F{Code testing

passed?} F -->|No| G[End - Fix issues] F -->|Yes| H[Deployment Phase] H --> I[Run

terraform init]

I --> J[Run terraform plan]

J --> K{Branch is devor

prod?}

K -->|Yes| L[Run terraform applyto respective environment] K -->|No| M[Skip

terraform applyEnd process] L --> N[Deployment Complete] style A fill:#e1f5fe style H fill:#e8f5e8 style F fill:#fff3e0 style K fill:#fff3e0 style G fill:#ffebee style M fill:#f3e5f5 style N fill:#e8f5e8

Back-end CI/CD workflow #

git checkout]

B --> C[Setup Python with Poetry]

C --> D[Install dependencies forback-end testing] D --> E[Run Python code testing] E --> F{Code testing

passed?} F -->|No| G[End - Fix issues] F -->|Yes| H[Deployment Phase] H --> I[Run

terraform init]

I --> J[Run terraform plan]

J --> K{Branch is devor

prod?}

K -->|Yes| L[Run terraform applyto respective environment] K -->|No| M[Skip

terraform applyEnd process] L --> N[Deployment Complete] style A fill:#e1f5fe style H fill:#e8f5e8 style F fill:#fff3e0 style K fill:#fff3e0 style G fill:#ffebee style M fill:#f3e5f5 style N fill:#e8f5e8

About deploying directly to AWS using AWS CLI #

Before I implemented the IaC (Terraform) to automate deployment, deployment is made by uploading some files to AWS using AWS CLI, which is executed in the Github Action. For example, code below is the job that I used to upload front-end artifact (a .zip file containing static web files like .html, .css, .js) to AWS S3.

DeployCodeToS3:

if: github.repository == 'timmytandian/remove-me-to-execute-this-job'

runs-on: ubuntu-latest

needs: Test-and-Build

steps:

#----------------------------------------------

# Artifact download, publish to AWS Lambda Layer

#----------------------------------------------

- name: Download the static web artifact

uses: actions/download-artifact@v4

with:

name: static-web-resources

- name: Setup AWS CLI

uses: aws-actions/configure-aws-credentials@v4

with:

aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

aws-region: ap-northeast-1

- name: Upload web resources to S3

run: |

aws s3 sync . s3://${{ vars.S3_BUCKET }} --delete

- name: Job status report

run: echo "🍏 This job's status is ${{ job.status }}."

After I implemented IaC, I commented out the Github Action job above because the logic of deployment is handled from within the Terraform code, i.e. from the CI/CD pipeline I execute terraform apply command.

Testing #

Back-end Python Test #

Because the essence of back-end python code is to read and update value from DynamoDB, we need a way to test the functionality of read/update our DynamoDB database without invoking the real database. To do this, we can mock the DynamoDB with a package called aws_lambda_powertools. With this package, we can create a dummy AWS resource that can behave similarly to the real one (the mock AWS resource exists conceptually in the python code, enabling us test the code logic.

You can see the unit test code to validate DynamoDB visitor count operation in this link

Front-end JavaScript Test #

async function getVisitorCount(){

// define the API endpoint

const apiEndpoint = new URL("https://3ijz5acnoe.execute-api.ap-northeast-1.amazonaws.com/counts/6632d5b4-5655-4c48-b7b6-071d5823cfunc=addOneVisitorCount");

// fetch the data from the database

try {

const apiResponse = await fetch(apiEndpoint, {

method: "GET",

});

if (!apiResponse.ok) {

let errorTitle = `Fetch API response not OK (status ${apiResponse.status})`

throw new Error(errorTitle);

}

// set the value to output variable

const data = await apiResponse.json();

return data;

}

catch(error) {

// log the error to console

console.error(error);

throw error

}

}

Please consider the JavaScript code above. In essence, the logic of front-end JavaScript code is to call the AWS API Gateway endpoint using the fetch API.

To test this functionality, I used jest to mock this API calling without calling the real AWS API Gateway endpoint. Below is the test script.

import 'isomorphic-fetch'; // fetch API may not be implemented yet in the test environment, so import this

import { getVisitorCount } from "../src/js/index.js";

// This is the section where we mock `fetch` API

const fetchMock = jest

.spyOn(global, 'fetch')

.mockImplementation(() =>

Promise.resolve({

json: () => Promise.resolve(229),

ok: true

}));

// This is actual testing suite

describe('index.js testing', () => {

test('getVisitorCount function', async () => {

const visitorCount = await getVisitorCount(); // getVisitorCount contains fetch API

expect(visitorCount).toEqual(229);

expect(fetchMock).toHaveBeenCalledTimes(1);

const fetchMockUrl = fetchMock.mock.calls[0][0].href;

expect(fetchMockUrl).toBe("https://3ijz5acnoe.execute-api.ap-northeast-1.amazonaws.com/counts/6632d5b4-5655-4c48-b7b6-071d5823c888?func=addOneVisitorCount");

});

});

In the code snippet above, I made three assertions:

- Calling

getVisitorCountfunction returns a number 229 (which I defined in the mock function). - The

getVisitorCountfunction is called 1x. - The fetch API in JavaScript calls the correct AWS API Gateway endpoint.

HTML linting #

The code to lint HTML file is very simple because all of the heavy lifting is being done by the gulp-htmlhint package. In the code below, I use htmlhint() function to lint the src/index.html file and made the script to return error if the linting found any problem.

import gulp from "gulp";

import htmlhint from 'gulp-htmlhint';

// Validate the HTML

gulp.task('html', function(){

return gulp.src('src/index.html')

.pipe(htmlhint())

.pipe(htmlhint.failAfterError());

});

// Define the default task

gulp.task("default", gulp.series("html"));

Infrastructure as Code (IaC) #

When implementing the IaC, there are several issues in which I put extra consideration.

- Issue 1: dev and prod environment

- Issue 2: folder structure

- Issue 3: resource import into Terraform

- Issue 4: Terraform best practices

Let’s see each of them one by one.

Issue 1. Dev and Prod environment #

When I start developing the IaC, I already had my cloud resume live. To make sure I have the smallest amount of downtime/error when developing the IaC, I decided to create two environments where I deploy the infrastructure: dev and prod. The dev environment is where I write and test IaC initial code. This dev environment is designed to closely mimics the prod environment, where I have the main cloud resume deployed to the world. The separation of dev and prod environment allows me to test the IaC before deployment, ensuring quality and stability.

Issue 2: Folder Structure #

“If want to have separate environment for development and production, how should I arrange the project folder structure? Should I manage the development stage in branch or in different repository?” This is the main question I asked when thinking the folder structure for this project.

Designing a scalable and maintainable folder structure is crucial, especially when managing multiple environments like dev and prod. I wanted a structure that makes environment separation clear while supporting modular growth in the future.

After some research and trial, I settled on the following folder layout:

terraform/

├── dev/

│ └── main.tf

│ └── outputs.tf

│ └── terraform.tf

│ └── variables.tf

├── prod/

│ └── backend.tf

│ └── main.tf

│ └── outputs.tf

│ └── terraform.tf

│ └── variables.tf

└── modules/

└── api_gateway/

│ └── main.tf

│ └── outputs.tf

│ └── terraform.tf

│ └── variables.tf

└── dynamodb/

│ └── main.tf

│ └── outputs.tf

│ └── terraform.tf

│ └── variables.tf

└── lambda/

└── main.tf

└── outputs.tf

└── terraform.tf

└── variables.tf

In this structure:

- Beside separating the folder, I separated the dev and prod configuration into two separate git branches. The

dev/andprod/each contain their ownmain.tf, making it easy to manage and test environments separately. - The

modules/directory holds reusable components, like Lambda functions or DynamoDB configurations. This promotes DRY principles and makes future scaling easier. - The

backend.tffile is shared across environments and defines remote state configuration. I used AWS S3 bucket as the place to store remote state configuration .

Issue 3. Resource import into Terraform #

Before I started the IaC, I already had the timmytandian.com cloud resume implemented using AWS cloud console. Because in the future I want to use Terraform to manage the timmytandian.com cloud resume, I had two options:

- Delete all resources that I have created from the AWS cloud console GUI, then recreate them using Terraform.

- We can use the

importblock in Terraform to move resource management from AWS cloud console GUI into Terraform.

Option 1 is good if we want to start this project from a clean sheet, while option 2 is better if we want to shift resource management without introducing any downtime. In the end, I chose option 2.

Below is the sample code to import an AWS IAM Role named “dynamodb-query-myresumevisitors-role-e5kax67j” into a Terraform module “lambda”. The import block below only need to be applied once (run terraform apply with configuration below 1x then comment out the code).

# Use the block below to import the resource from AWS

# IMPORTANT: after the import procedure finished, the code below should be commented out.

import {

to = module.lambda.aws_iam_role.lambda_code

id = "dynamodb-query-myresumevisitors-role-e5kax67j"

}

Issue 4. Terraform best practices #

To ensure maintainability and scalability of my infrastructure as it grows, I applied several Terraform best practices:

- Use Modules: I created reusable modules for major features like Lambda functions, IAM roles, and DynamoDB. This allows me to reuse the same code for both dev and prod environments with different variable inputs.

- Remote State Management: I configured a remote backend using an S3 bucket with state locking enabled via DynamoDB. This helps prevent state corruption and makes collaboration or automation safe and reliable.

- Keep Secrets Out of Code: Any sensitive values (like API keys or secrets) are passed in securely using environment variables or secret managers, never hardcoded in Terraform files.

- Automated Formatting and Validation: I always run

terraform fmtandterraform validatefrom the Github Action to ensure clean and error-free code. - Assign value to a variable. Instead of hard-coding some names or identifiers, I always try to assign those names or identifiers to Terraform variable (not only input/output variables, but also local values). With this, changing some system parameters is easy and efficient, e.g. changing my cloud resume domain name from timmytandian.com to resume.timmytandian.com.

These best practices ensure that my Terraform configuration remains clean, secure, and maintainable as the project evolves.

Conclusion #

As this Cloud Resume Challenge journey comes to a close, I’d like to wrap up by highlighting some key DevOps decisions I made throughout the project:

- Source Control: I managed two separate GitHub repositories — one for the front-end code and another for the back-end code. Although this separation provides good clarity, I would prefer to have a mono-repo structure for better integrity and maintainability.

- CI/CD Pipeline: I used GitHub Actions to implement automated pipelines, enabling consistent testing and deployment workflows for both front-end and back-end components.

- Testing Strategy: I incorporated multiple levels of testing to ensure stability and quality:

- For the Python back-end, I used AWS Lambda Powertools to mock DynamoDB and perform unit tests effectively.

- For the JavaScript front-end, I used Jest to mock API calls without triggering the actual AWS API Gateway endpoint.

- I also added linting for the HTML code to ensure consistent formatting and detect common errors early.

- Infrastructure as Code (IaC): I used Terraform to manage all infrastructure, clearly separating it into two environments: dev for staging and testing, and prod for the live deployment.

While I’ve done my best to document the major parts of this project, there’s still so much that happened behind the scenes — countless hours troubleshooting errors, reading documentation, and evaluating architecture choices. While the cloud resume seems like a simple static resume site on the surface, it can quickly grow into a complex, flexible, and educational DevOps project depending on how deep we want to go.

If you’re aspiring to become a cloud engineer, I highly recommend taking on this challenge. It’s more than just a portfolio piece — it’s a real-world learning experience that ties together cloud, infrastructure, automation, and software engineering. I hope this post gave you some useful insights and inspiration.